The Future of Machine Learning: An Interview with Daniel Situnayake

Working at Google

Abate: You spent almost three years with Google. Before we get into the details about your positions there, can you tell us a bit about what it was like joining such an impressive company?

Situnayake: After five years building a farming company, which involved more time spent on construction work than it did on coding, I wanted to get back in at the deep end, and there was no company I admired technically more than Google.

After my first few days, I was blown away. I’d never worked at a large tech company before. With your Google badge, you can access facilities at any Google office, anywhere in the world, so it felt like I had become a citizen of a strange new type of country, with its own public services, infrastructure, and government.

Google has an amazing culture of freedom: you can pretty much work on whatever you want, as long as it makes an impact on your product. If you have a cool idea, you’re encouraged to pursue it, and to bring other folks on board to help. This leads to a huge number of exciting projects, but it can also be a bit overwhelming: with all this choice, how do you decide where to apply yourself?

This is where things get a bit tricky. Because there is always so much going on, there are potentially endless meetings, distractions, and things to think about. The first year or so of working at Google is about developing your inner filter so you don’t feel overwhelmed by all the noise.

It’s a bit of a myth that everyone working at Google is some kind of genius. There’s definitely the same range of abilities inside of Google as in the rest of the tech industry. I’ve never been big on hero worship, and it’s a nice feeling when you meet some of the top folks in your field and realize that they’re normal people, just like you.

Abate: You worked at Google as a Developer Advocate for TensorFlow Lite. What is TensorFlow Lite? And how did you educate developers? Through meetups? Online courses?

Situnayake: TensorFlow is the name for Google’s ecosystem of open-source tools for training, evaluating, and deploying deep learning models. It includes everything from the high-level Python code used to define model architectures all the way down to the low-level code used to execute those models on different types of processors.

TensorFlow Lite is the subset of TensorFlow tools that deal with deploying models to so-called “edge devices,” which include everything smaller than a personal computer: phones, microcontrollers, and more. The TensorFlow Lite tools can take a deep learning model and optimize it so that it is better suited to run on these types of devices. It also includes the highly optimized code that runs on the devices themselves.

My favorite part of TensorFlow Lite is the stripped-down, super-fast variant that is designed to run deep learning models on tiny, cheap, low-power microcontrollers. Prior to the launch of TensorFlow Lite for Microcontrollers, developers had to write their own low-level code for running machine learning models on embedded devices. This made the barrier to entry for on-device machine learning incredibly high. TensorFlow’s entry into this field knocked down a lot of walls, so suddenly anyone with some embedded experience could run models. It was very exciting to see the field of TinyML spring to life.

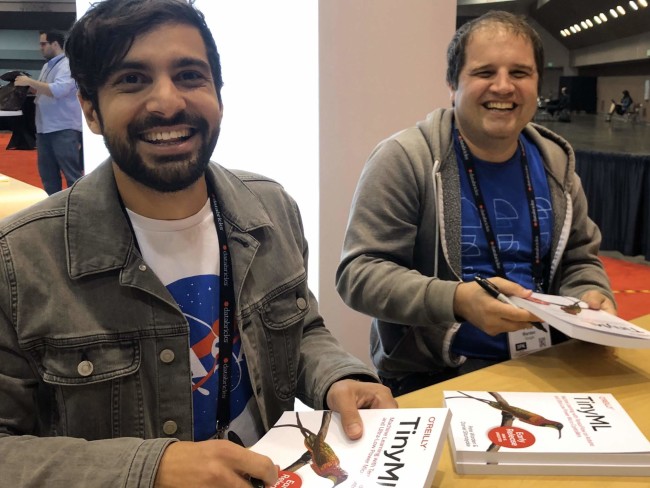

My role was to help the TensorFlow Lite team understand and connect with the developer ecosystem. All developers are different, so it’s important to create multiple ways for them to engage. We did everything you can imagine, from in-person meetups and Google-funded conferences through to building easy-to-understand example code and writing the TinyML book!

Situnayake: Working with TensorFlow developers, I got to see that even the best machine learning tools and libraries require a huge amount of education to use. Despite some excellent courses and content, the required time investment puts machine learning out of reach for a great number of busy engineers. Training models is as much an art as a science, and the instincts and best practices take years to learn. Things are even harder with TinyML, because it’s such a new field, and the constraints are not well known.

When I heard about Edge Impulse, the company I left Google to join, I was blown away. Even before the official launch, the founders had managed to build a product that allowed any developer to train deep learning models that would run on embedded hardware. I could tell from my work with the developer community that they had cracked some of the toughest problems in the field, abstracting away the pain points that make machine learning tools difficult to work with.

I believe that embedded machine learning is a once-in-a-generation technology. It’s going to transform the world around us in ways that we have yet to realize. Edge Impulse was founded to get this technology into the hands of every engineer, so they can use their diverse perspectives and deep domain knowledge to solve complex problems in every part of our world.

As soon as I realized this potential, I decided to jump on board. It was sad leaving my friends at Google, but TinyML is a small world, and I still work closely with many of them!
